Programming and software development

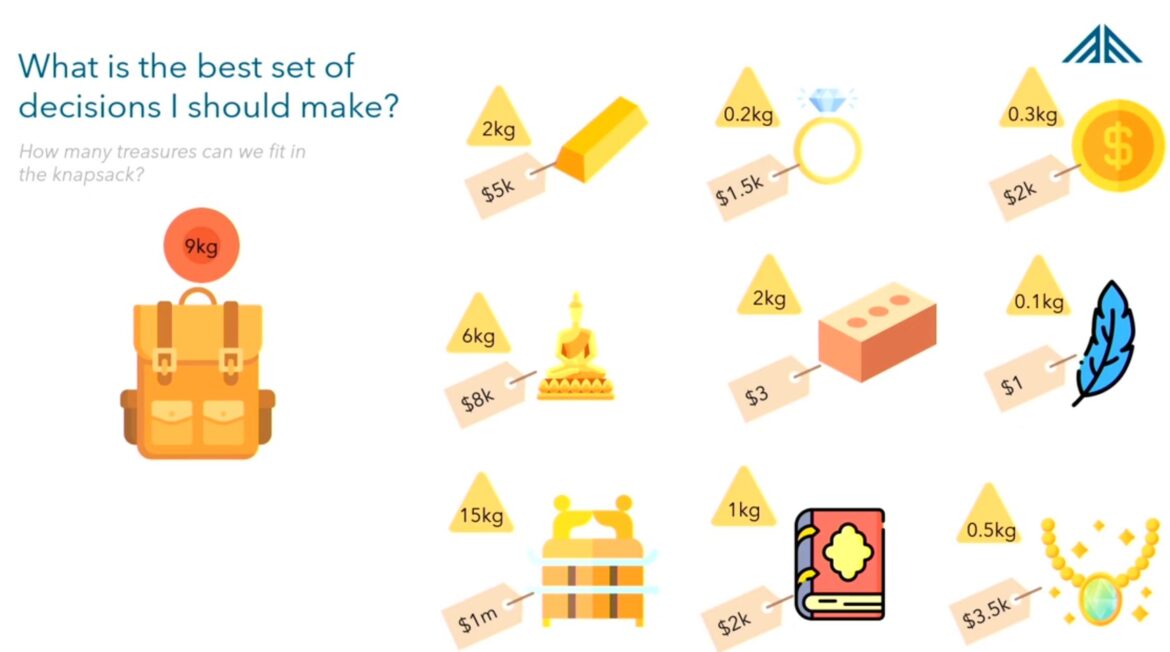

During my PyCon presentation on Mathematical optimisation, I used the example of the knapsack problem to walk people through the structure of a linear program. I’ve had a number of people request access to this code, so I thought I’d write it up in a post so it could be more easily shared. This will be a relatively concise article so feel free to refer to the presentation for any additional context.

What is the knapsack problem?

- You have a knapsack that can only carry so much weight (in our case 9 kg)

- Every item you can place in the knapsack has a weight and a value

- You want to maximise the value of the items you choose to put in the knapsack

- What is the most valuable mix of items you can choose?

Why use this example?

I decided to use this particular example because I wanted to illustrate how mathematical optimisation is perfect for solving problems where you have a large number of granular decisions to make whilst subject to constraints. Many industries face some version of these problems. While machine learning is fantastic for prediction/classification problems, mathematical optimisation was developed specifically to solve complex decision problems.

Let’s solve it in Python

Step 1: Import libraries and initialise a model object.

import gurobipy as gp

from gurobipy import GRB

m = gp.Model("knapsack")

Step 2: Create the model decision variables. These are the binary decisions (0 or 1) that determine whether we are selecting this item to go into the knapsack.

gold = m.addVar(vtype=GRB.BINARY, name="gold") diamond = m.addVar(vtype=GRB.BINARY, name="diamond") coin = m.addVar(vtype=GRB.BINARY, name="coin") statue = m.addVar(vtype=GRB.BINARY, name="statue") brick = m.addVar(vtype=GRB.BINARY, name="brick") feather = m.addVar(vtype=GRB.BINARY, name="feather") ark = m.addVar(vtype=GRB.BINARY, name="ark") book = m.addVar(vtype=GRB.BINARY, name="book") necklace = m.addVar(vtype=GRB.BINARY, name="necklace")

Step 3: Create the weight constraint – in this case the backpack cannot hold more than 9 kg of objects.

backpack_max_weight = 9

m.addConstr(2 * gold +

0.2 * diamond +

0.3 * coin +

6 * statue +

2 * brick +

0.1 * feather +

15 * ark +

1 * book +

0.5 * necklace <= backpack_max_weight,

name="max_weight")

Step 4: Create the objective function of the model – we want to maximise the value of the objects we select.

m.setObjective(

5000 * gold +

1500 * diamond +

2000 * coin +

8000 * statue +

3 * brick +

1 * feather +

1000000 * ark +

2000 * book +

3500 * necklace,

GRB.MAXIMIZE)

Step 5: Run the model and extract the variables and their optimal values.

m.optimize()

for v in m.getVars():

print(v.VarName, v.X)